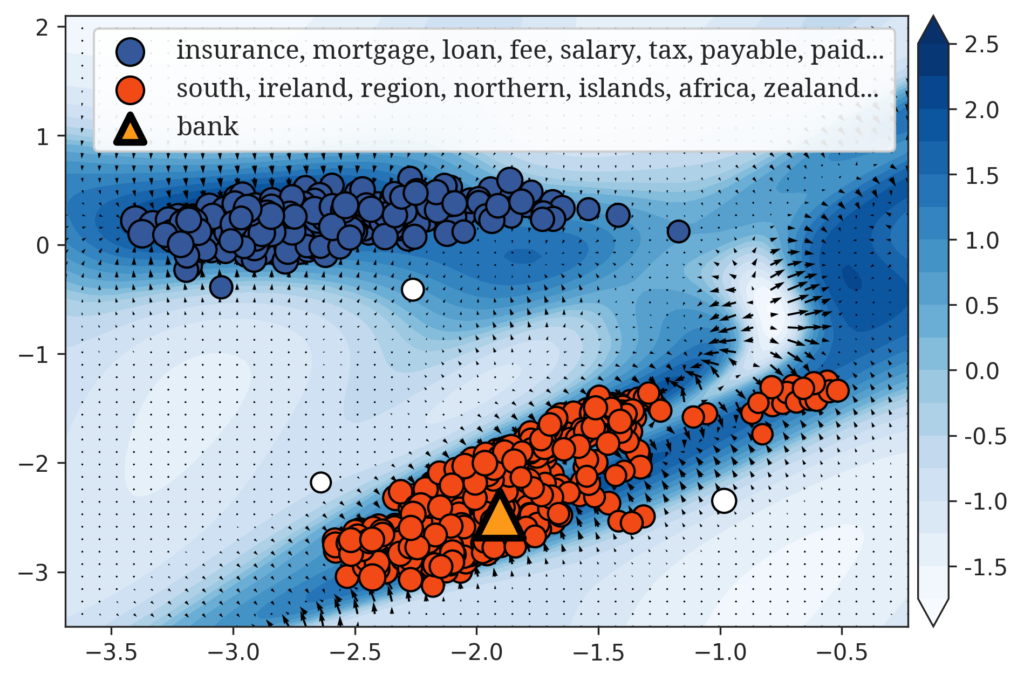

State-of-the-art word embedding methods represent a word with a single vector and presume a linear vector space. Such a space does not easily incorporate the nonlinearity needed, for example, to represent polysemous words (words that have multiple meanings). We study alternative mathematical representations of language, such as nonlinear functions and fields. One formulation we have proposed, FIRE (Du and Tanaka-Ishii, 2022), outperforms BERT in an evaluation that predicts the number of senses of a word.
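The contrast between a single vector and a function can be illustrated with a toy sketch. Following the published description of FIRE, in which each word is a set of locations in a field together with a nonlinear function covering that field, the hypothetical `FieldWord` below stores both. Several things here are assumptions for illustration only, not the FIRE implementation: the field is 2-D, the learned functions are replaced by hand-placed Gaussian bumps (`bumps`), relation strength is averaged over locations, similarity is the symmetric sum of the two relations, and `count_modes` (counting local maxima on a grid) is just one naive way to read a sense count off a multimodal function, not necessarily the paper's evaluation protocol.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple

Point = Tuple[float, float]  # a location in a toy 2-D semantic field (D = 2 assumed)


def bumps(centers: List[Point], width: float = 0.6) -> Callable[[Point], float]:
    """Hypothetical stand-in for a learned nonlinear function: a sum of Gaussian bumps.

    Several well-separated centers make the function multimodal.
    """
    def f(x: Point) -> float:
        return sum(
            math.exp(-((x[0] - cx) ** 2 + (x[1] - cy) ** 2) / (2 * width ** 2))
            for cx, cy in centers
        )
    return f


@dataclass
class FieldWord:
    """A word as a set of locations plus a function defined over the whole field."""
    locations: List[Point]
    field: Callable[[Point], float]


def relation(w: FieldWord, v: FieldWord) -> float:
    """Strength of w's relation to v: w's function evaluated at v's locations (averaged, an assumption)."""
    return sum(w.field(p) for p in v.locations) / len(v.locations)


def similarity(a: FieldWord, b: FieldWord) -> float:
    """Symmetric similarity (an assumption): the relation measured in both directions."""
    return relation(a, b) + relation(b, a)


def count_modes(w: FieldWord, lo: float = -2.0, hi: float = 7.0, step: float = 0.25) -> int:
    """Count strict local maxima of w.field on a square grid, comparing each point to its 8 neighbours."""
    n = int(round((hi - lo) / step)) + 1
    grid = [[w.field((lo + i * step, lo + j * step)) for j in range(n)] for i in range(n)]
    modes = 0
    for i in range(1, n - 1):
        for j in range(1, n - 1):
            neighbours = [grid[i + di][j + dj]
                          for di in (-1, 0, 1) for dj in (-1, 0, 1) if (di, dj) != (0, 0)]
            if grid[i][j] > max(neighbours):
                modes += 1
    return modes


# Toy example: "bank" sits in both a finance region and a river region of the field.
FINANCE, RIVER = (0.0, 0.0), (5.0, 5.0)
money = FieldWord([FINANCE], bumps([FINANCE]))
river = FieldWord([RIVER], bumps([RIVER]))
bank = FieldWord([FINANCE, RIVER], bumps([FINANCE, RIVER]))

print(count_modes(money), count_modes(bank))  # 1 2
print(round(similarity(bank, money), 3),
      round(similarity(bank, river), 3),
      round(similarity(money, river), 3))      # 1.5 1.5 0.0
```

In this toy setting, "bank" is strongly related to both "money" and "river" while those two stay unrelated to each other, and its function has two modes. A single point in a linear space would have to sit somewhere between the two senses to approximate this.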

References

- Xin Du and Kumiko Tanaka-Ishii. FIRE: Semantic Field of Words Represented as Non-Linear Functions. *Advances in Neural Information Processing Systems*, 35: 37095-37107, 2022.