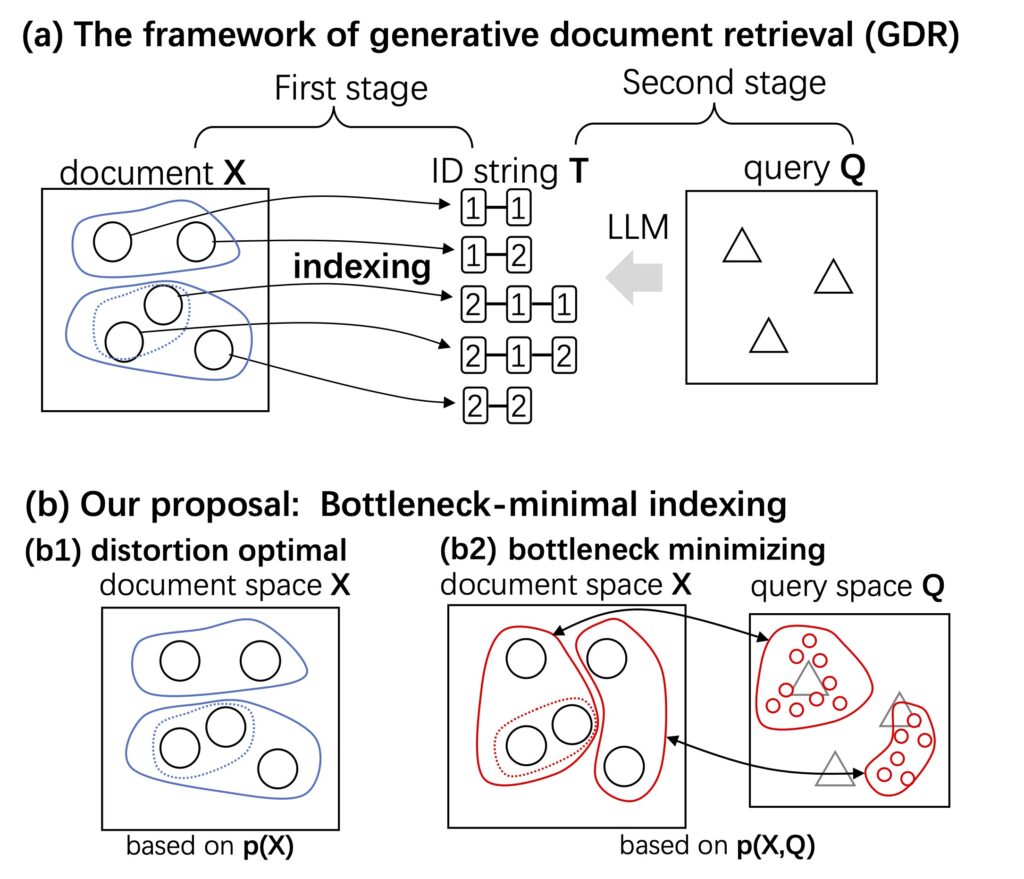

We apply an information-theoretic perspective to reconsider generative document retrieval (GDR), in which a document x∈X is indexed by t∈T, and a neural autoregressive model is trained to map queries Q to T. GDR can be considered to involve information transmission from documents X to queries Q, with the requirement to transmit more bits via the indexes T. By applying Shannon’s rate-distortion theory, the optimality of indexing can be analyzed in terms of the mutual information, and the design of the indexes T can then be regarded as a bottleneck in GDR. After reformulating GDR from this perspective, we empirically quantify the bottleneck underlying GDR. Finally, using the NQ320K and MARCO datasets, we evaluate our proposed bottleneck-minimal indexing method in comparison with various previous indexing methods, and we show that it outperforms those methods.
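To make the "bits transmitted via the indexes" idea concrete, the following is a minimal sketch that computes the mutual information I(X;T) between documents and their indexes from a joint probability table. The helper `mutual_information` and the two toy index assignments are illustrative assumptions, not the indexing method or the estimation procedure used in the paper.

```python
import math


def mutual_information(joint):
    """Return the mutual information (in bits) of a joint table joint[x][t]."""
    # Marginal distributions over rows (documents) and columns (indexes).
    row = [sum(r) for r in joint]
    col = [sum(c) for c in zip(*joint)]
    mi = 0.0
    for i, r in enumerate(joint):
        for j, p in enumerate(r):
            if p > 0:  # zero-probability cells contribute nothing
                mi += p * math.log2(p / (row[i] * col[j]))
    return mi


# Toy setup (assumed): four equally likely documents.
# Case 1: every document gets its own index, so T identifies X exactly.
unique_ids = [[0.25 if i == j else 0.0 for j in range(4)] for i in range(4)]

# Case 2: documents are paired, two documents per index.
shared_ids = [
    [0.25, 0.0],
    [0.25, 0.0],
    [0.0, 0.25],
    [0.0, 0.25],
]

print(mutual_information(unique_ids))  # 2.0 bits
print(mutual_information(shared_ids))  # 1.0 bit
```

In this toy setting, coarser indexes carry fewer bits about the documents, which illustrates the kind of trade-off that a rate-distortion analysis of indexing is concerned with.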

References

- Xin Du, Lixin Xiu, and Kumiko Tanaka-Ishii. Bottleneck-minimal indexing for generative document retrieval. In Proceedings of the Forty-first International Conference on Machine Learning (ICML), Vienna, Austria, 2024.