Large language models (LLMs) have achieved remarkable progress in natural language generation, yet they continue to display puzzling behaviors, such as repetition and incoherence, even when exhibiting low…

Machine learning

This paper proposes formulating Zipf’s meaning-frequency law, the power law between word frequency and the number of meanings, as a relationship between word frequency and…

Clustering is a fundamental technique in machine learning and data mining, offering a powerful lens to understand self-organizing patterns in the real world. At its…

This paper shows a novel machine learning model for realized volatility (RV) prediction using a normalizing flow, an invertible neural network. Since RV is known to…

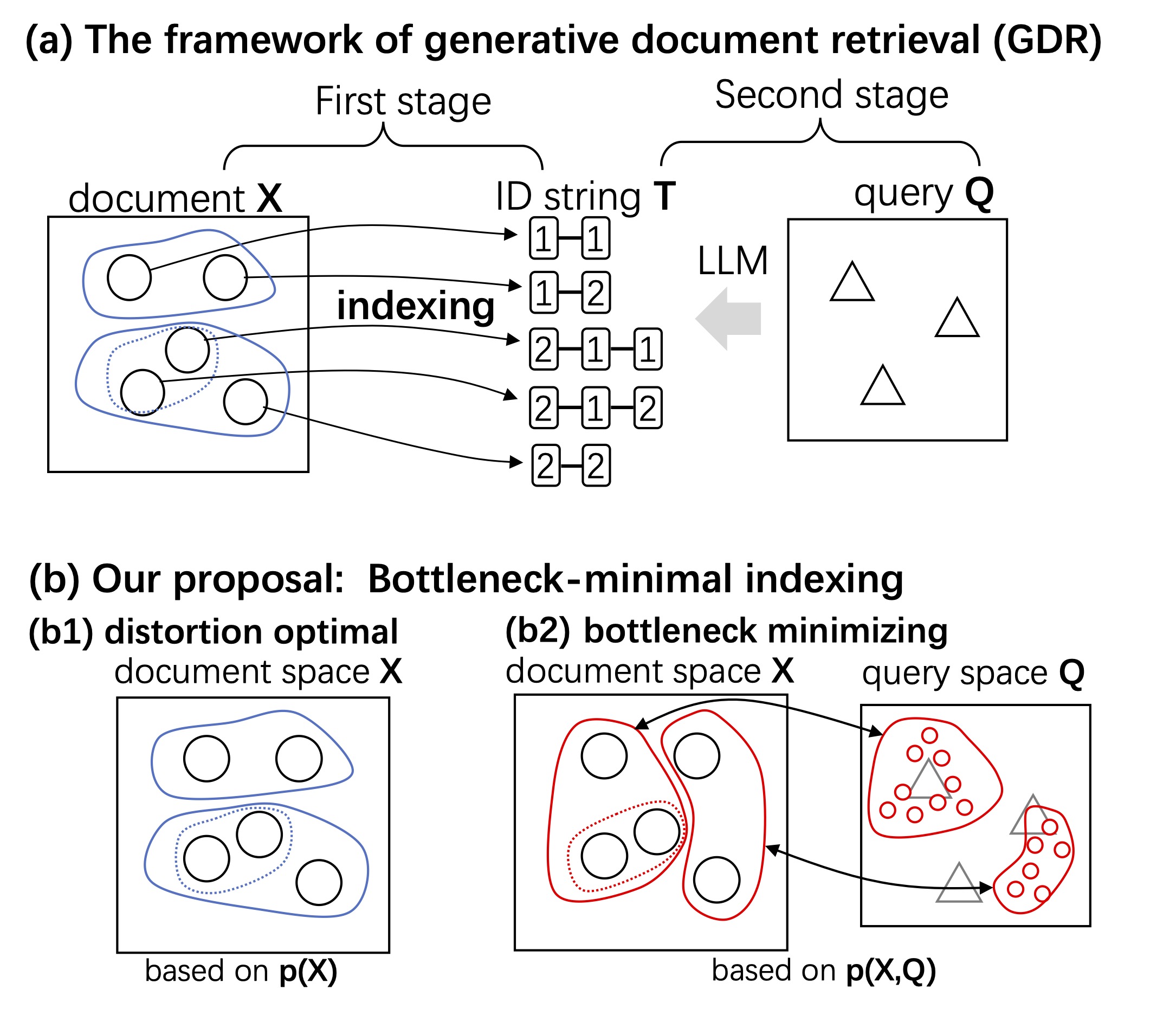

We apply an information-theoretic perspective to reconsider generative document retrieval (GDR), in which a document x∈X is indexed by t∈T, and a neural autoregressive model is…

Templates are multi-word expressions with slots, such as “Starting at _ on _ ” or “regard _ as _”, that appear frequently in text and…

A generative model is a mathematical formulation that generates a sample similar to real data. Many such models have been proposed using machine learning methods,…

For mathematical models of language, their potential, limitations, and ways of improvement are investigated in terms of whether they reproduce the complex properties of language….

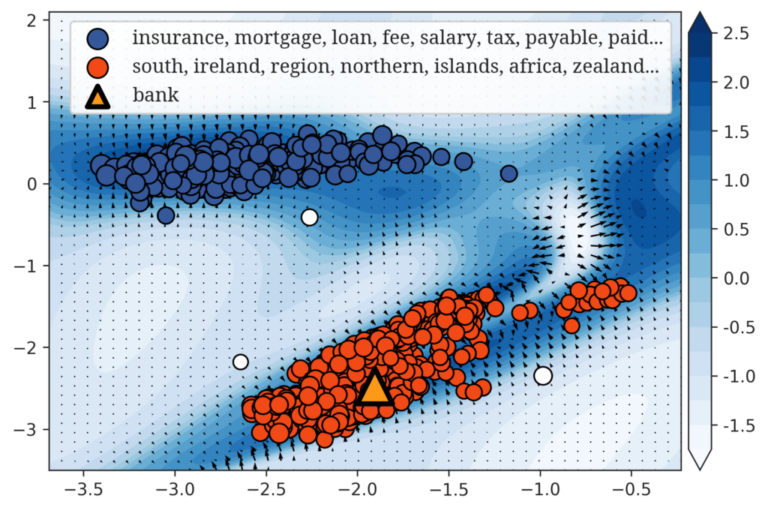

State-of-the-art word embedding methods represent a word with a single vector and presume a linear vector space, which does not easily incorporate nonlinearity that is…