Heading

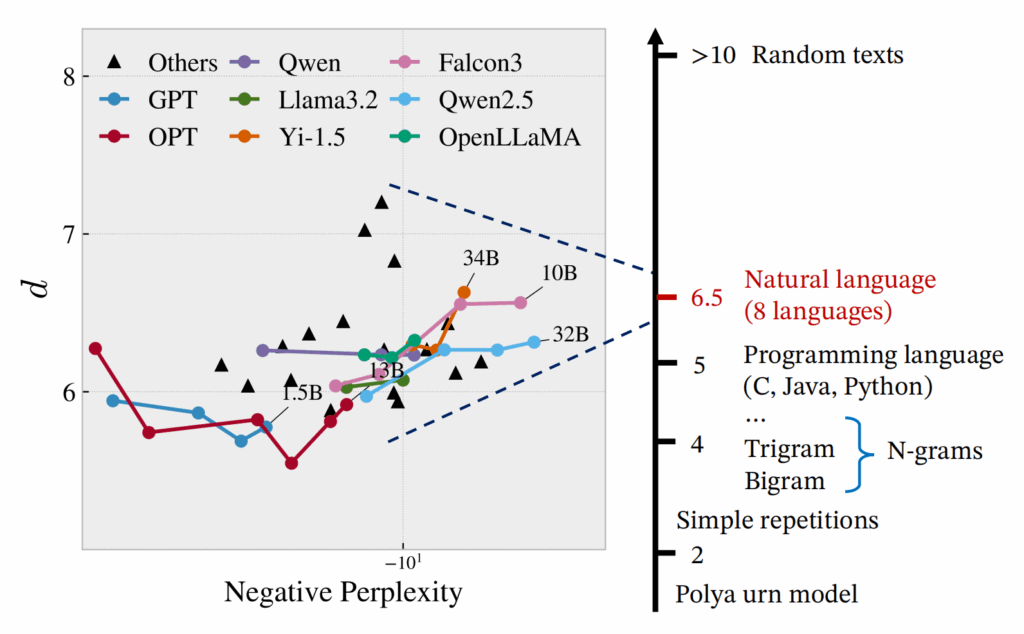

Large language models (LLMs) have achieved remarkable progress in naturallanguage generation, yet they continue to display puzzling behaviors—such asrepetition and incoherence—even when exhibiting low perplexity. Thishighlights a key limitation of conventional evaluation metrics, whichemphasize local prediction accuracy while overlooking long-range structuralcomplexity. We introduce correlation dimension, a fractal-geometric measureof self-similarity, to quantify the epistemological complexity of text asperceived by a language model. This measure captures the hierarchicalrecurrence structure of language, bridging local and global properties in aunified framework. Through extensive experiments, we show that correlationdimension (1) reveals three distinct phases during pretraining, (2) reflectscontext-dependent complexity, (3) indicates a model’s tendency towardhallucination, and (4) reliably detects multiple forms of degeneration ingenerated text. The method is computationally efficient, robust to modelquantization (down to 4-bit precision), broadly applicable acrossautoregressive architectures (e.g., Transformer and Mamba), and providesfresh insight into the generative dynamics of LLMs.

References

Du, X., & Tanaka-Ishii, K. (2025). Correlation Dimension of Auto-Regressive Large Language Models. arXiv preprint arXiv:2510.21258.[link]